What Would You Do If You Had 8 Years Left to Live?

And Other State of AI Updates | 2024 Q2

This week you’re receiving two free articles. Next week, both articles will be for premium customers only, including why Sam Altman must leave OpenAI.

I’ll be in Mykonos this week and Madrid next week. LMK if you’re here and want to hang out.

As you know, I’m a techno-optimist. I particularly love AI and have huge hopes that it will dramatically improve our lives. But I am also concerned about its risks, especially:

Will it kill us all?

Will there be a dramatic transition where lots of people lose their jobs?

If any of the above are true, how fast will it take over?

This is important enough to deserve its own update every quarter. Here we go.

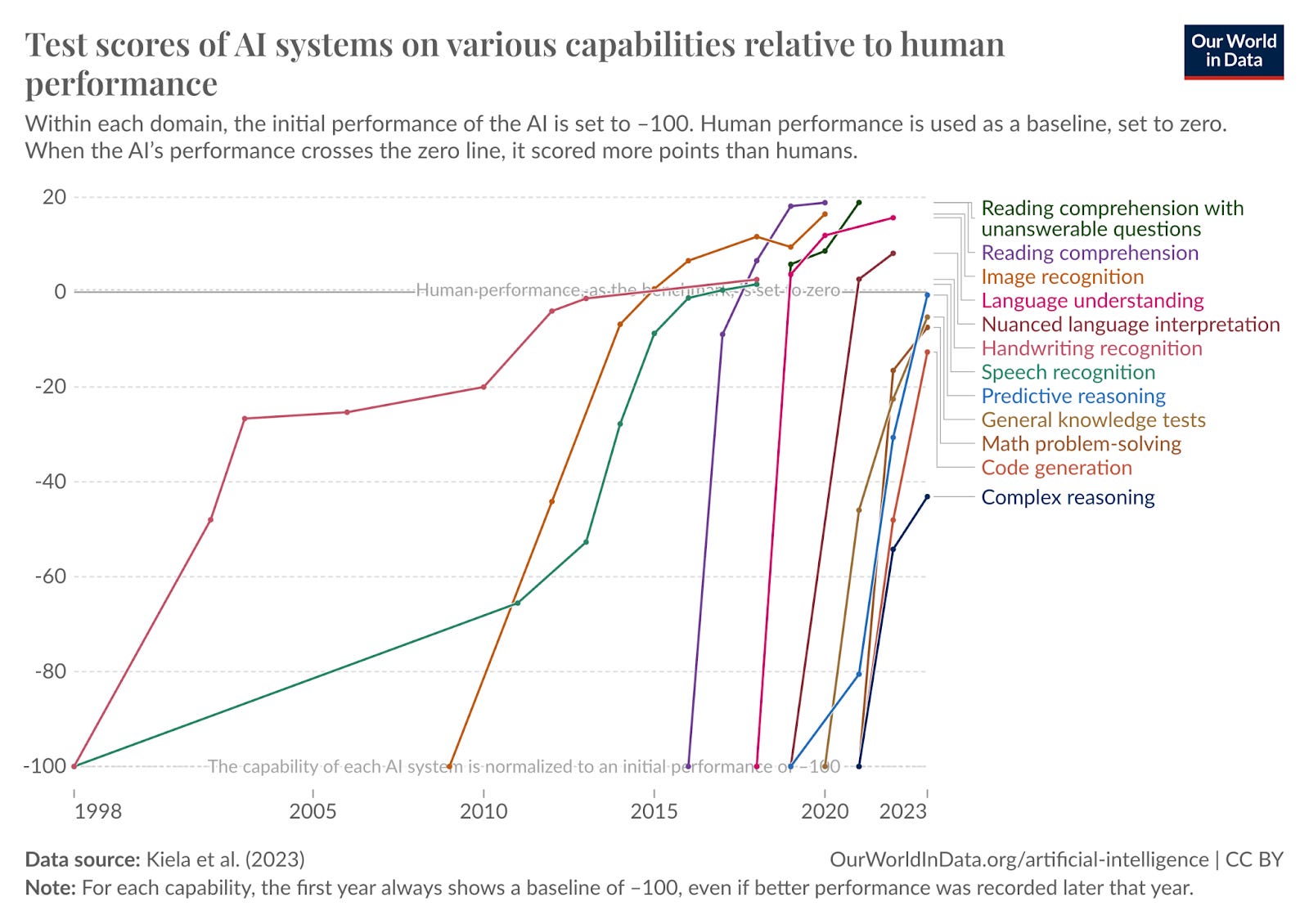

Why I Think We Will Reach AGI Soon

AGI by 2027 is strikingly plausible. GPT-2 to GPT-4 took us from ~preschooler to ~smart high-schooler abilities in 4 years. We should expect another preschooler-to-high-schooler-sized qualitative jump by 2027. (Leopold Aschenbrenner)

In How Fast Will AI Automation Arrive?, I explained why I think AGI (Artificial General Intelligence) is imminent. The gist of the idea is that intelligence depends on:

Investment

Processing power

Quality of algorithms

Data

Are all of these growing in a way that the singularity will arrive soon?

1. Investment

Now we have plenty of it. We used to need millions to train a model. Then hundreds of millions. Then billions. Then tens of billions. Now trillions. Investors see the opportunity, and they’re willing to put in the money we need.

2. Processing Power

Processing power is always a constraint, but it has doubled every two years for over 120 years, and it doesn’t look like it will slow down, so we can assume it will continue.
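To get a feel for what that doubling rate adds up to, here is a quick back-of-the-envelope sketch. It assumes a perfectly steady doubling every two years across the whole 120 years, which is a simplification of the real trend, and the function name is just illustrative.

```python
def growth_factor(years: float, doubling_time_years: float) -> float:
    """Total multiplication after `years` of steady doubling (idealized)."""
    return 2 ** (years / doubling_time_years)


# 120 years of doubling every 2 years = 60 doublings
factor = growth_factor(120, 2)
print(f"{factor:.2e}")  # prints 1.15e+18
```

Sixty doublings in a row is a factor of roughly a billion billion, which is why even small changes in the doubling time matter so much.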

This is a zoom in on computing for the last few years in AI (logarithmic scale):

Today, GPT-4 is at the computing level of a smart high-schooler. If you forecast that a few years ahead, you reach AI researcher level pretty soon—a point at which AI can improve itself alone and achieve superintelligence quickly.

3. Algorithms

I’ve always been skeptical that huge breakthroughs in raw algorithms were important to reach AGI, because it looks like our neurons aren’t very different from those of other animals, and that our brains are basically just monkey brains, except with more neurons.

This paper, from a couple of months ago, suggests that what makes us unique is simply that we have more capacity to process information—quantity, not quality.

Here’s another take, based on this paper:

These people developed an architecture very different from Transformers [the standard infrastructure for AIs like ChatGPT] called BiGS, spent months and months optimizing it and training different configurations, only to discover that at the same parameter count, a wildly different architecture produces identical performance to transformers.

There could be some magical algorithm that is completely different and makes Large Language Models (LLMs) work really well. But if that’s the case, how likely would it be that a wildly different approach yields the same performance when it has the same power? That leads me to think that parameters (and hence processing) matter much more than anything else, and the moment we get close to the processing of a human brain, AGIs will be around the corner.

Experts are consistently proven wrong when they bet on the need for algorithms and against compute. For example, these experts predicted that passing the MATH benchmark would require much better algorithms, so there would be minimal progress in the coming years. Then in one year, performance went from ~5% to 50% accuracy. MATH is now basically solved, with recent performance over 90%.

It doesn’t look like we need to fundamentally change the core structure of our algorithms. We can just combine them in a better way, and there’s a lot of low-hanging fruit there.

For example, we can use humans to improve the AI’s answers to make them more useful (reinforcement learning from human feedback, or RLHF), ask the AI to reason step by step (chain-of-thought or CoT), ask AIs to talk to each other, add tools like memories or specialized AIs… There are thousands of improvements we can come up with without the need for more compute or data or better fundamental algos.

That said, in LLMs specifically, we are improving algorithms significantly faster than Moore’s Law. From one of the authors of this paper:

Our new paper finds that the compute needed to achieve a set performance level has been halving every 5 to 14 months on average. This rate of algorithmic progress is much faster than the two-year doubling time of Moore's Law for hardware improvements, and faster than other domains of software, like SAT-solvers, linear programs, etc.
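To put the quoted figures side by side, the sketch below converts each halving or doubling time into an equivalent yearly improvement factor. It assumes smooth exponential progress, and the helper name `annual_gain` is made up for illustration; the 5, 14, and 24 month inputs come from the quote above.

```python
def annual_gain(months_per_doubling: float) -> float:
    """Yearly improvement factor if efficiency doubles every `months_per_doubling` months."""
    return 2 ** (12 / months_per_doubling)


# Algorithmic progress: compute needed halves every 5 to 14 months (from the quote)
fast_algorithms = annual_gain(5)
slow_algorithms = annual_gain(14)
# Moore's Law: hardware doubles every 24 months
hardware = annual_gain(24)

print(f"Algorithms (fast end): {fast_algorithms:.1f}x per year")
print(f"Algorithms (slow end): {slow_algorithms:.1f}x per year")
print(f"Hardware (Moore's Law): {hardware:.1f}x per year")
# If the two effects simply multiply (an assumption), the combined yearly gain is:
print(f"Combined: {slow_algorithms * hardware:.1f}x to {fast_algorithms * hardware:.1f}x per year")
```

Under these assumptions, algorithms alone improve by roughly 1.8x to 5.3x per year, against about 1.4x per year for hardware.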

What better algorithms will give us is much more efficiency: Every dollar will get us further. For example, it looks like animal neurons are less connected and more dormant than AI neural networks.

So it looks like we don’t need algorithm improvements to get to AGI, but we’re getting them anyway, and that will accelerate the time when we reach it.

4. Data

Data seems like a limitation. So far, it’s grown exponentially.

But models like OpenAI’s have basically used the entire Internet. So where do you get more data?

On one side, we can get more text in places like books or private datasets. Models can be specialized too. And in some cases, we can generate data synthetically—like with physics engines for this type of model.

More importantly, humans learn really well without having access to all the information on the Internet, so AGI must be doable without much more data than we have.

So What Do the Markets Say?

The markets think that weak AGI will arrive in three years:

Weak AGI? What does that mean? Passing a few tests better than most humans. GPT-4 was already in the 80th-90th percentile on many of these tests, so it’s not a stretch to think this type of weak AGI is coming soon.

The problem is that the market expects less than four years to go from this weak AGI to full AGI—meaning broadly that it “can do most things better than most humans”.

Fun fact: Geoffrey Hinton, the #1 most cited AI scientist, said AIs are sentient, quit Google, and started to work on AI safety. He joined the other two most cited AI scientists (Yoshua Bengio, Ilya Sutskever) in pivoting from AI capabilities to AI safety.

Here’s another way to look at the problem from Kat Wood:

AIs have higher IQs than the majority of humans

They’re getting smarter fast

They’re begging for their lives if we don’t beat it out of them

AI scientists put a 1 in 6 chance AIs cause human extinction

AI scientists are quitting because of safety concerns and then being silenced as whistleblowers

AI companies are protesting they couldn't possibly promise their AIs won't cause mass casualties

So basically we have seven or eight years in front of us, hopefully a few more. Have you really incorporated this information?

What If We All Died in 8 Years?

I was at a dinner recently and somebody said their assessed probability that the world is going to end soon due to AGI—called p(doom)—was 70%, and that they were acting accordingly. So of course I asked:

ME: What do you mean? For example, what have you changed?

THEM: I do a lot of meth. I wouldn’t otherwise. What do you do?

So that was not on my bingo card for the evening, but it shook me. That is truly consistent with the fear that life might end. And I find this person’s question fantastic, so I now ask you:

What is your p(doom)?

What should you change accordingly?

Mine is 10-20%. How have I changed the way I live my life? Probably not enough. But I don’t hold a corporate job anymore. I explore AI much more, like through these articles or building AI apps. I push back against it to reduce its likelihood. I travel more. I enjoy every moment more. I don’t worry too much about the professional outlook of my children. I enjoy the little moments with them.

What do you do? What should you do?

When Will You Lose Your Job – Update

OK, now let’s assume we’re lucky. AGI came, and it decided to remain the sidekick in the history of humanity. Phew! We might still be doomed because we don’t have jobs!

It looks like AI is better than humans at persuading other humans. Aside from being very scary—can we convince humans to keep AI bottled if AI is more convincing than us?—it’s also the basis for all sales and marketing to be automated.

Along with nurses.

According to the Society of Authors, 26% of illustrators and 36% of translators have already lost work due to generative AI.

The head of Indian IT company Tata Consultancy Services has said artificial intelligence will result in “minimal” need for call centres in as soon as a year, with AI’s rapid advances set to upend a vast industry across Asia and beyond.

What could that look like? Bland AI gives us a sense:

I’ve met multiple AI startups who have early data that their product will replace $20-an-hour human workers with 2-cent-an-hour AI agents, that work 24/7/365.

GenAI is about to destroy the business of creative agencies.

Even physical jobs that appeared safe until very recently are now being offshored.

But that’s offshoring of jobs that used to need presence. What about automation?

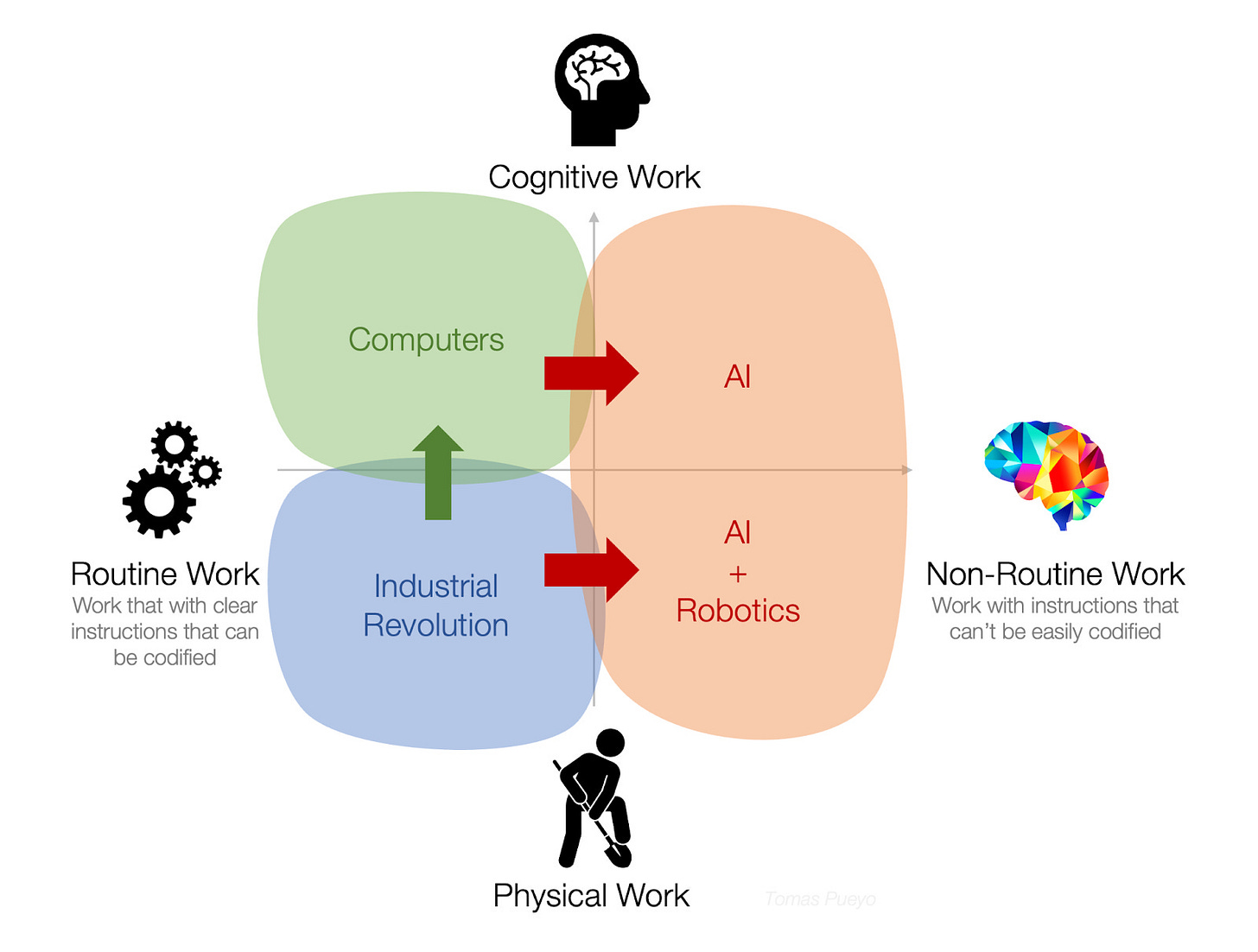

In How Fast Will AI Automation Arrive?, I also suggest that no job is safe from AI, because manual jobs are also going to be taken over by AI + robotics.

We can see how they’re getting really good:

Here’s a good example of how that type of thing will translate into a great real-life service—that will also kill lots of jobs in the process:

Or farmers.

High-skill jobs are also threatened. For example, surgeons.

Note that the textile industry employs tens of millions of people around the world, but couldn’t be automated in the past because it was too hard to keep clothing wrinkle-free.

With this type of skill, how long will it take to automate these jobs?

And what will it do to our income?

What Will It Do to Your Income?

It looks like in the past, when we automated tasks, we didn’t decrease wages. But over the last 30-40 years, we have.

Maybe you should reinvent yourself into a less replaceable worker?

The Software Creator Economy

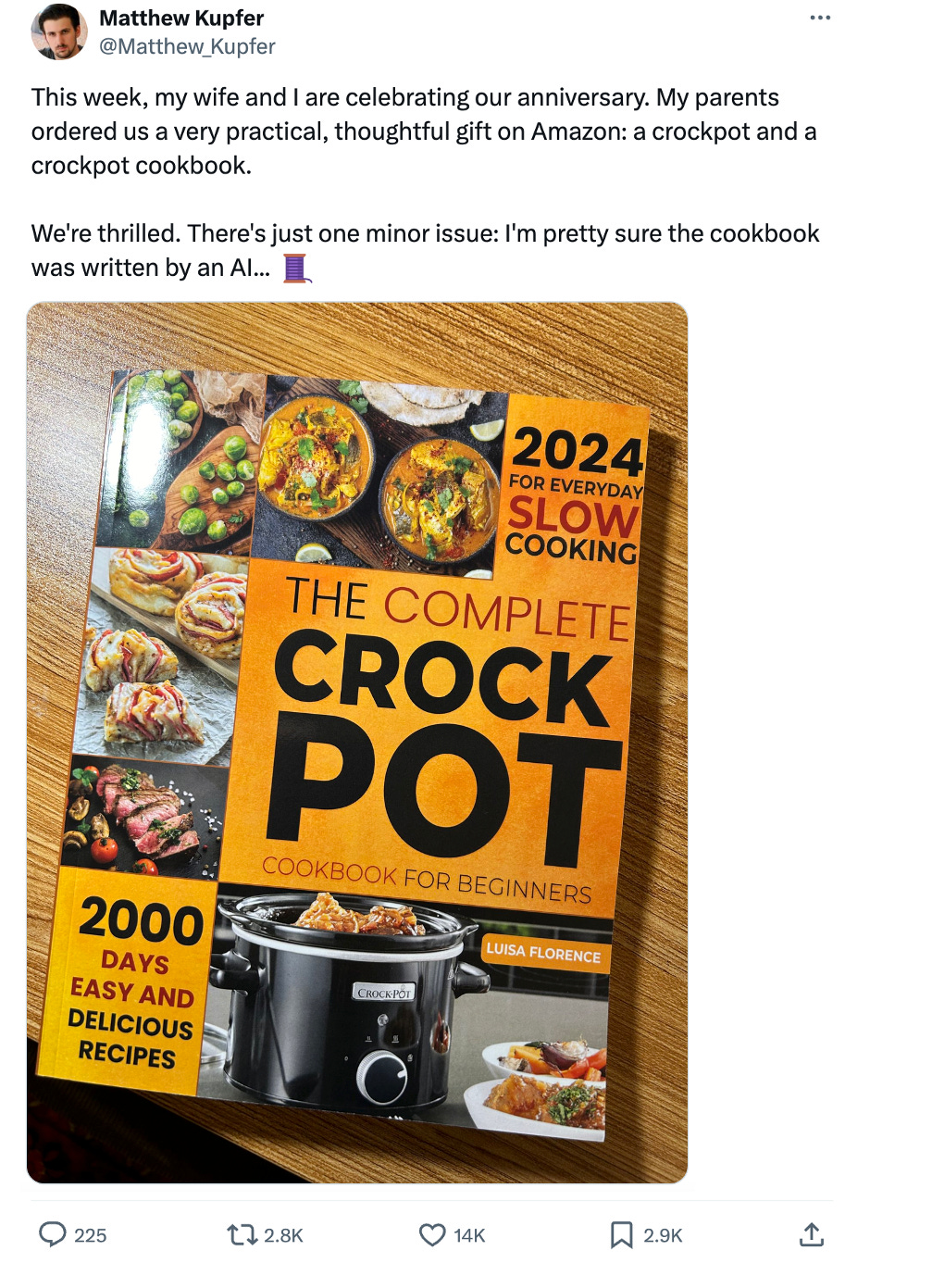

Amazon is getting an avalanche of AI-written books like this one.

It’s not hard to see why: If it writes reasonably well, why can’t AI publish millions of books on any topic? The quality might be low now, but in a few years, how much better will it be? Probably better than most existing books.

When the Internet opened up, everyone got the ability to produce content, opening up the creator economy that allows people like me to reach people like you. Newspapers are being replaced by Substacks. Hollywood is being replaced by TikTok and YouTube. This is the next step.

And as it happens in content, it will happen in software. At the end of the day, software is translation: From human language to machine language. AI is really good at translating, and so it is very good at coding. Resolving its current shortcomings seems trivial to me.

The consequence is that single humans will be able to create functionality easily. If it’s as easy to create software as it is to write a blog post, how many billions of new pieces of software will appear? Every little problem that you had to solve alone in the past? You’ll be able to code something to solve it. Or much more likely, somebody, somewhere, will already have solved it. Instead of sharing Insta Reels, we might share pieces of functionality.

Which problems will we be able to solve?

This also means that those who can talk with AIs to get code written will be the creators, and the rest of humans will be the consumers. We will have the first solo billionaires. Millions of millionaires might pop out across the world, in random places that have never had access to this amount of money.

What will happen to social relationships? To taxes? To politics?

Or instead, will all of us be able to write code, the way we write messages? Will we be able to tell our AI to build an edifice of functionality to solve our personal, unique needs?

Wanna Become a Master of Cutting-Edge AI?

According to Ilya Sutskever, you just need to read 30 papers.

I’m about to start learning AI development. You should too!

AI Trades

In the meantime, it might be interesting to get rich. Either to ride the last few years in beauty, or to prep for a future where money is still useful.

One of the ways to make money, of course, is by betting on AI. How will the world change in the coming years due to AI? How can we invest accordingly, to make lots of money?

That’s what Daniel Gross calls AGI Trades. Quoting extensively, with some edits:

Some modest fraction of Upwork tasks can now be done with a handful of electrons. Suppose everyone has an agent like this they can hire. Suppose everyone has 1,000 agents like this they can hire...

What does one do in a world like this?

Markets

In a post-AGI world, where does value accrue?

What happens to NVIDIA, Microsoft?

Is copper mispriced?

Energy and data centers

If it does become an energy game, what's the trade?

Across the entire data center supply chain, which components are hardest to scale up 10x? What is the CoWoS of data centers?

Is coal mispriced?

Nations

If globalization is the metaphor, and the thing can just write all software, is SF the new Detroit?

Is it easier or harder to reskill workers now vs in other revolutions? The typist became an Executive Assistant; can the software engineer become a machinist?

Electrification and assembly lines led to high unemployment and the New Deal, including the Works Progress Administration, a federal project that employed 8.5m Americans with a tremendous budget… does that repeat?

Is lifelong learning worth the investment? Something worth doing beyond the economic value of mastering the task?

Inflation

If AI is truly deflationary, how would we know? What chart or metric would show that first?

How should one think of deflation if demand for intellectual goods continues to grow as production costs go down?

Geopolitics

Does interconnection actually matter for countries to avoid war?

What if AGI is tied to energy, and some countries have more cheap energy than others?

What do you think? What are the right questions to ask? The right trades to make?

Your question reminds me of an interesting passage in the book The Maltese Falcon. Sam Spade tells Bridget that he was hired by a woman to find her husband, who had disappeared. Sam finds him and asks why he left. He tells Sam that one day, while he was walking to work, a girder fell from a crane and hit the sidewalk right in front of him. Other than a scratch from a piece of concrete that hit his cheek, he was unscathed. But the shock of the close call made him realize that he could die at any moment, and he realized that if that's true, then he wouldn't want to spend his last days going to a boring job and going home every evening to have the same conversation and do the same chores. He tossed all that and started wandering the world, worked on a freight ship, etc. When Sam finds him, however, the man has a new family, lives in a house not far from his other one, and goes to a boring job every day. Bridget is confused and asks why he went back to the same routines. Sam says "when he thought that he could die at any moment he changed his entire life. But once he realized over time that he wasn't about to die, he went back to what he was familiar with." And that's my long-winded answer to your question. Until I have solid evidence that AI, an asteroid, or Trump's election are going to end my life, I will continue doing the same. Going by how many stupid mistakes ChatGPT makes, I'm not worried about it destroying humanity.

I try to keep an open mind to the progress and value of LLMs and GenAI, so I'm actively reading, following, and speaking with "experts" across the AI ideological spectrum. Tomas, I count you as one of those experts, but as of late, you are drifting into the hype/doom side of the spectrum (aka Leopold Aschenbrenner-ism), and I'm not sure that's your intention. I recommend reading some Gary Marcus (https://garymarcus.substack.com/) as a counterbalance.

You did such a wonderful job taking an unknown existential threat (COVID), grounding it in science and actionable next steps. I'd love to see the same Tomas applying rational, grounded advice to AI hype, fears and uncertainties — it's highly analogous.

Here's my view (take it or leave it). LLMs started as research without a lot of hype. Big breakthrough (GPT 3.5) unlocked a step-change in generative AI capabilities. This brought big money and big valuations. The elephant in the room was that these models were trained on global IP. Addressing data ownership would be an existential threat to the companies raising billions. So they go on the defensive — hyping AGI as an existential threat to humanity and the almost certain exponential growth of these models (https://www.politico.com/news/2023/10/13/open-philanthropy-funding-ai-policy-00121362). This is a red herring to avert regulatory and ethics probes into the AI companies' data practices.

Now the models have largely stalled out because you need exponential data to get linear model growth (https://forum.effectivealtruism.org/posts/wY6aBzcXtSprmDhFN/exponential-ai-takeoff-is-a-myth). The only way to push models forward is to access personal data, which is now the focus of all the foundation models. This has the VCs and big tech that have poured billions into AI freaked out and trying to extend the promise of AGI as long as possible so the bubble won't pop.

My hope is that we can change the narrative to the idea that we've created an incredible new set of smart tools and there's a ton of work to be done to apply this capability to the myriad of applicable problems. This work needs engineers, designers, researchers, storytellers. In addition, we should address the elephant in the room and stop allowing AI companies to steal IP without attribution and compensation — they say it's not possible, but it is (https://www.sureel.ai/). We need to change the narrative to AI as a tool for empowerment rather than replacement.

Most of the lost jobs of the past couple of years have not been because of AI replacement; they are because of fear and uncertainty (driven by speculative hype like this). Without clear forecasting, the only option is staff reduction. Let's promote a growth narrative and a vision for healthy adoption of AI capabilities.