AI in 2026

Note: my latest update on robotaxis (from two days ago) has new data that changes the conclusions. Read it here (premium).

Things are moving so fast in AI, and there’s so much to digest, that it’s hard to write a good article on it, but that’s why it’s so important. Here’s my update on the most important trends right now, to cover who might win the AI bubble, how fast progress is going, how we’re doing on AI self-improvement, the emergence of consciousness, and the impact on jobs.

Next up in AI:

Can algorithms keep improving?

Blockers to AI progress

Can we make AIs aligned to our goals, or will they kill us all?

How should we manage a world after AGI?

Is AI changing our culture?

At night, a group of friends is drinking and laughing at the bar, with its warm light flooding the street. They are enjoying their Friday night together, as they have every week for over a decade.

On the other side of the street, behind metal detectors, security passes, gated doors, and closed rooms, another group of people is huddled around a computer screen. They’re all still, listening as the AI researcher has an existential crisis:

They are summoning God from silicon. From fucking sand! The world is about to be completely upended! Nothing will be the same! Will we survive? I don’t know! Will I have a job? I don’t know! Will we be rich enough to enjoy life? I don’t know! How many planets will the great great grand-children of Sam Altman and Dario Amodei and Elon Musk own? What should we do? What if it’s not aligned? Do we really know? It’s so nice, but is it really? Or is it faking it so we release it and it turns against us? Are we really going to fucking release this thing into the wild?

Silence falls over the room and the group, murmuring, slowly disperses. One of the engineers takes his badge, passes the three security gates, and leaves the building. He looks to the other side of the street and thinks:

They have no idea.

AI Bubble?

AI is now so big that its mental structure can be seen from space:

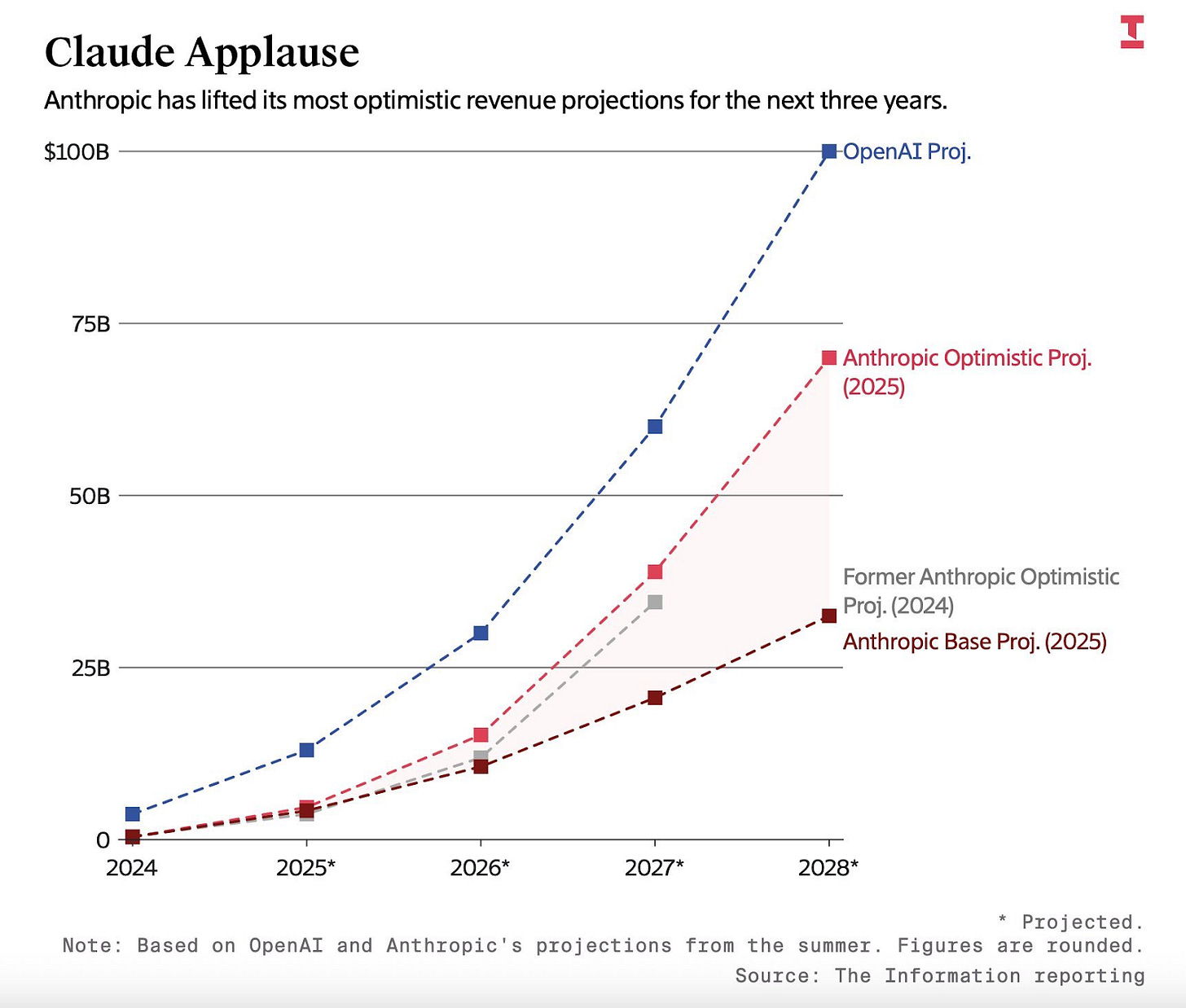

These are the revenue projections for OpenAI and Anthropic:

Anthropic’s optimistic forecasts are higher than the most optimistic ones from 2024!

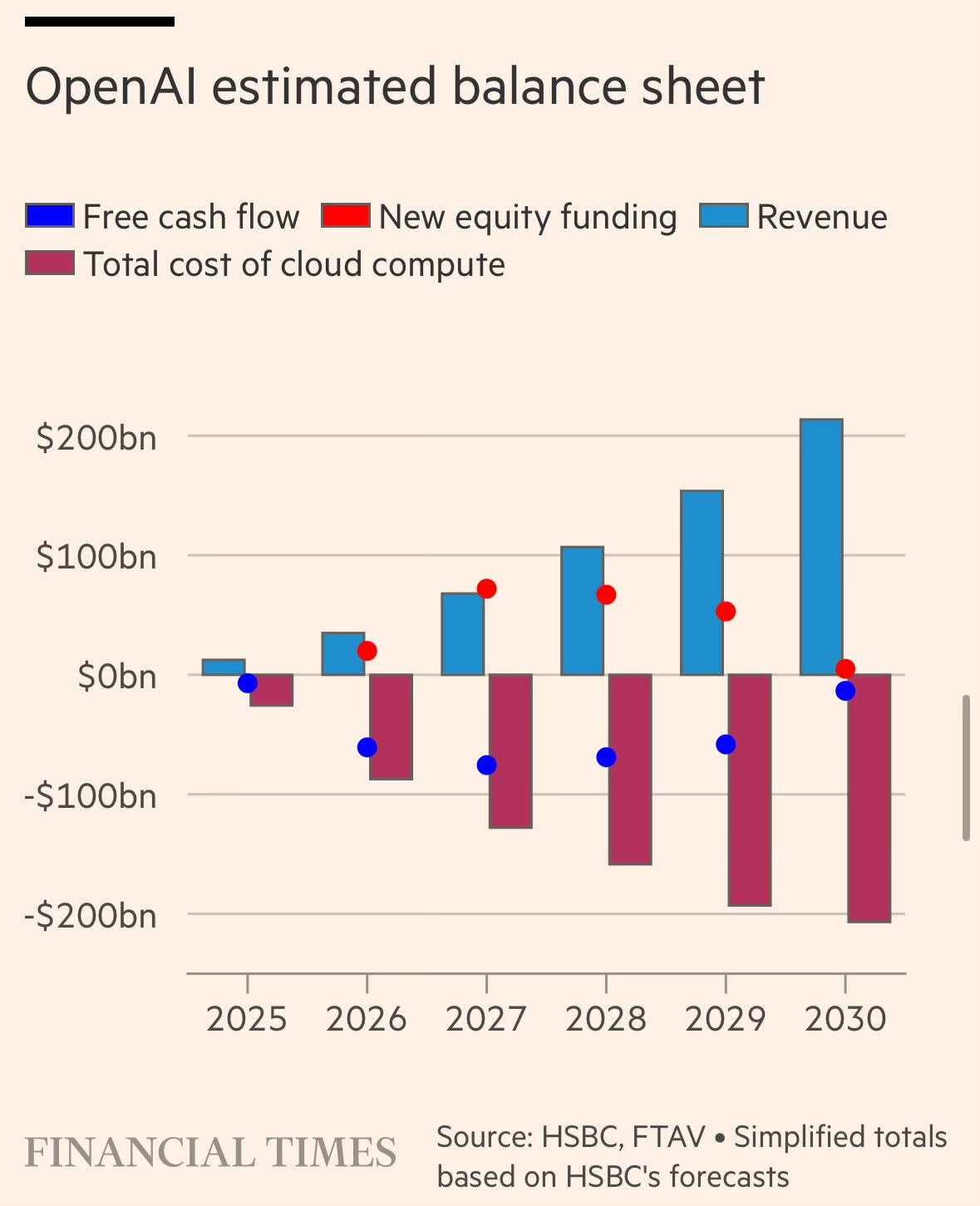

And look at OpenAI’s! $100B in revenue! As a result, it’s planning this in terms of spending and investments:

OpenAI expects its cloud spending to reach hundreds of billions per year! It will have to raise tens of billions of dollars every year until 2030, when free cash flow might finally compensate for the cost of cloud compute. Staggering.

The danger, though, is that a lot of this demand is subsidized. A task might cost them a few dollars but cost you nothing. This only works if our demand for AI keeps increasing and their costs keep decreasing. Will they?

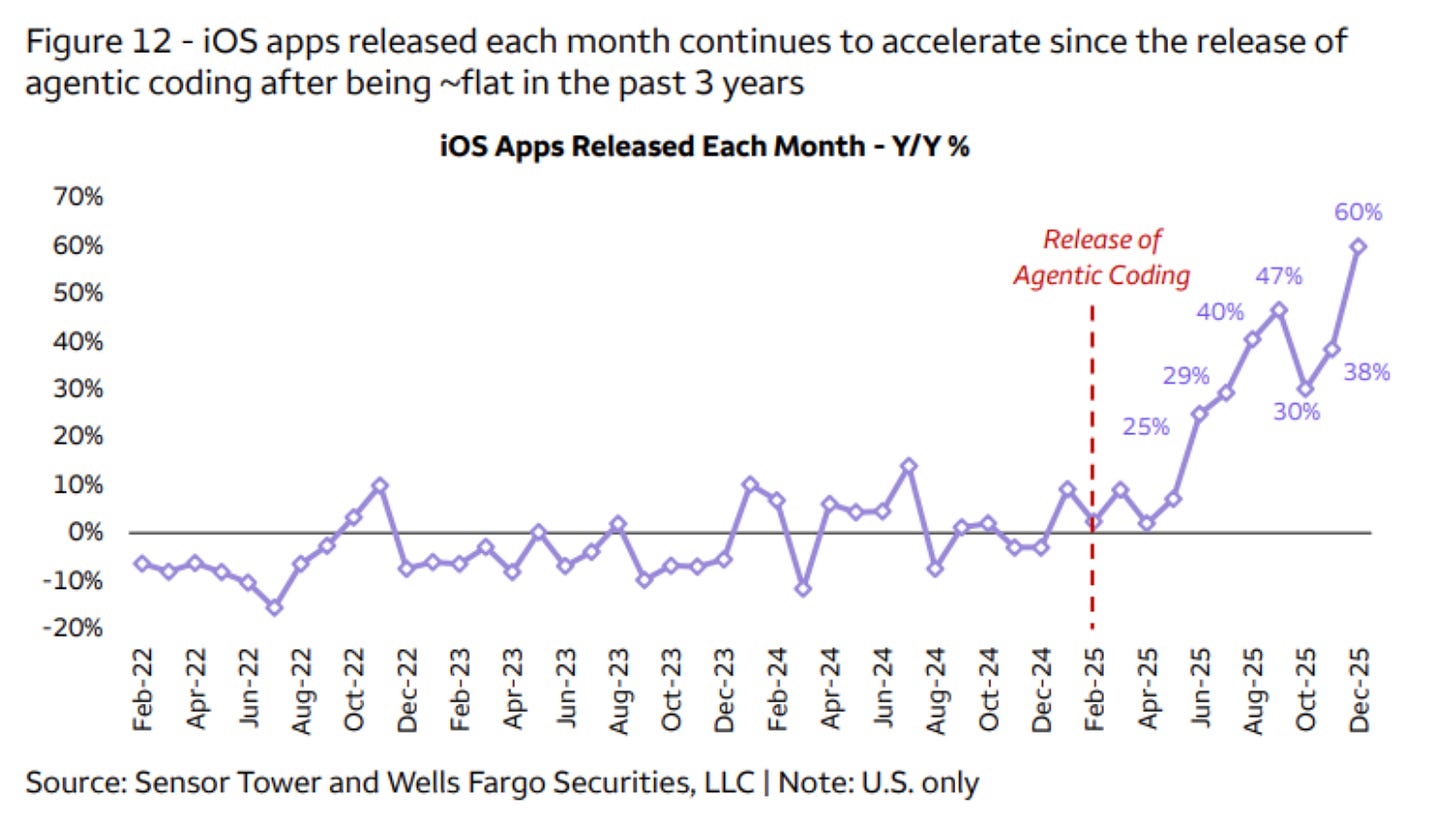

Builders are certainly using it like never before to create more and more apps.

Who Wins in the AI Bubble?

In 2014, Google purchased 40,000 NVIDIA GPUs at $130M to power their first AI workloads. However, they quickly recognized the high cost of running those AI workloads at scale, so they hired a highly talented team to develop TPUs, focusing on making them significantly more performant and cost-effective for AI workloads compared to GPUs. This is the real sustainable first-mover advantage in the AI race that investors should focus on.—Source.

This is one of the reasons I don’t have all my money invested in NVIDIA.¹ I can see the demand continuing to grow tremendously, but I can’t see NVIDIA always being in the near-monopoly situation it has been in until now. More on this when we discuss potential blockers in a future article.

Rate of Progress

The key is the rate of progress. It’s been running very fast, but for how long can it be maintained?

In the article about compute, I explained how compute has been progressing by 10x every two years, and must continue doing so. And here we are: The new NVIDIA architecture, Vera Rubin, reduces token (“thinking”) costs by 10x over the previous architecture (Blackwell) and training costs by 4x.

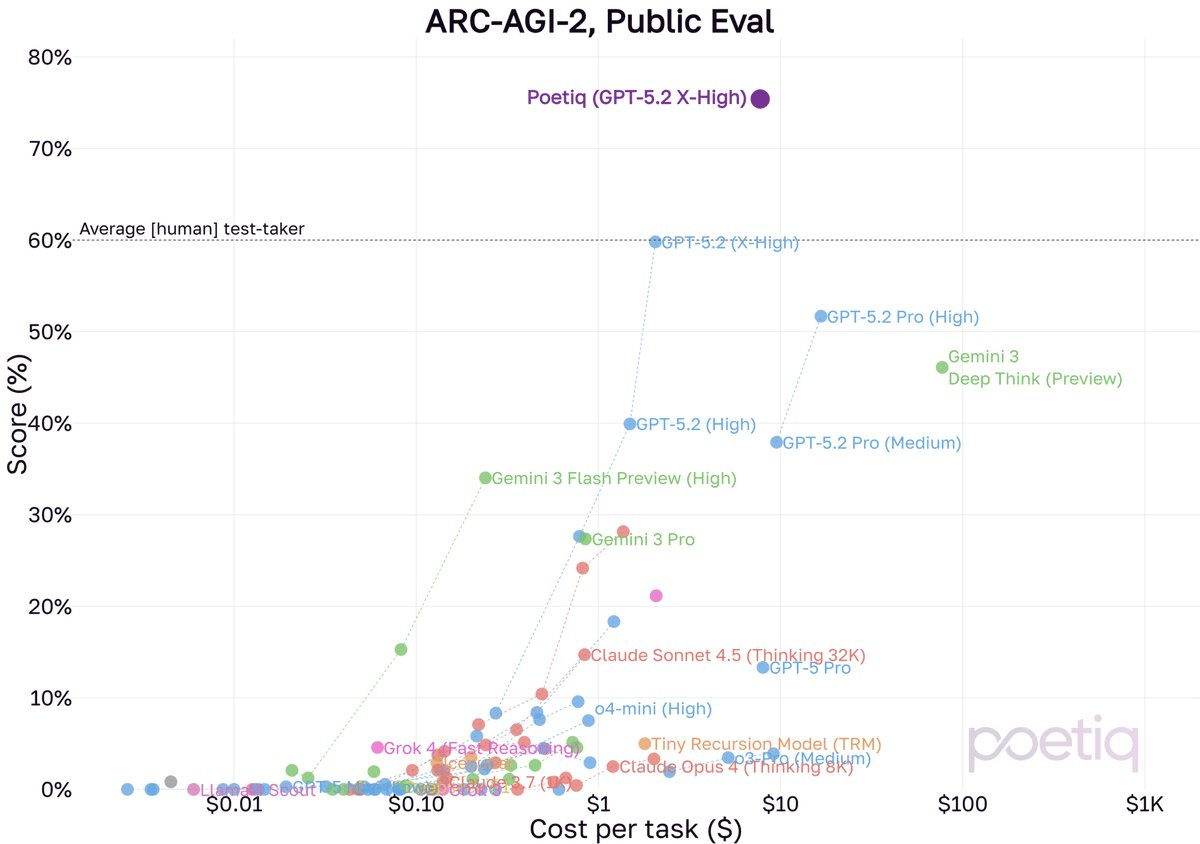

As a result of this and algorithmic improvements, models keep improving at an incredible rate. The cost per task has shrunk by 300x in one year, and scores on the very hard ARC-AGI-2 test² have risen from below 20% a year ago to 45% in November and 55% now.

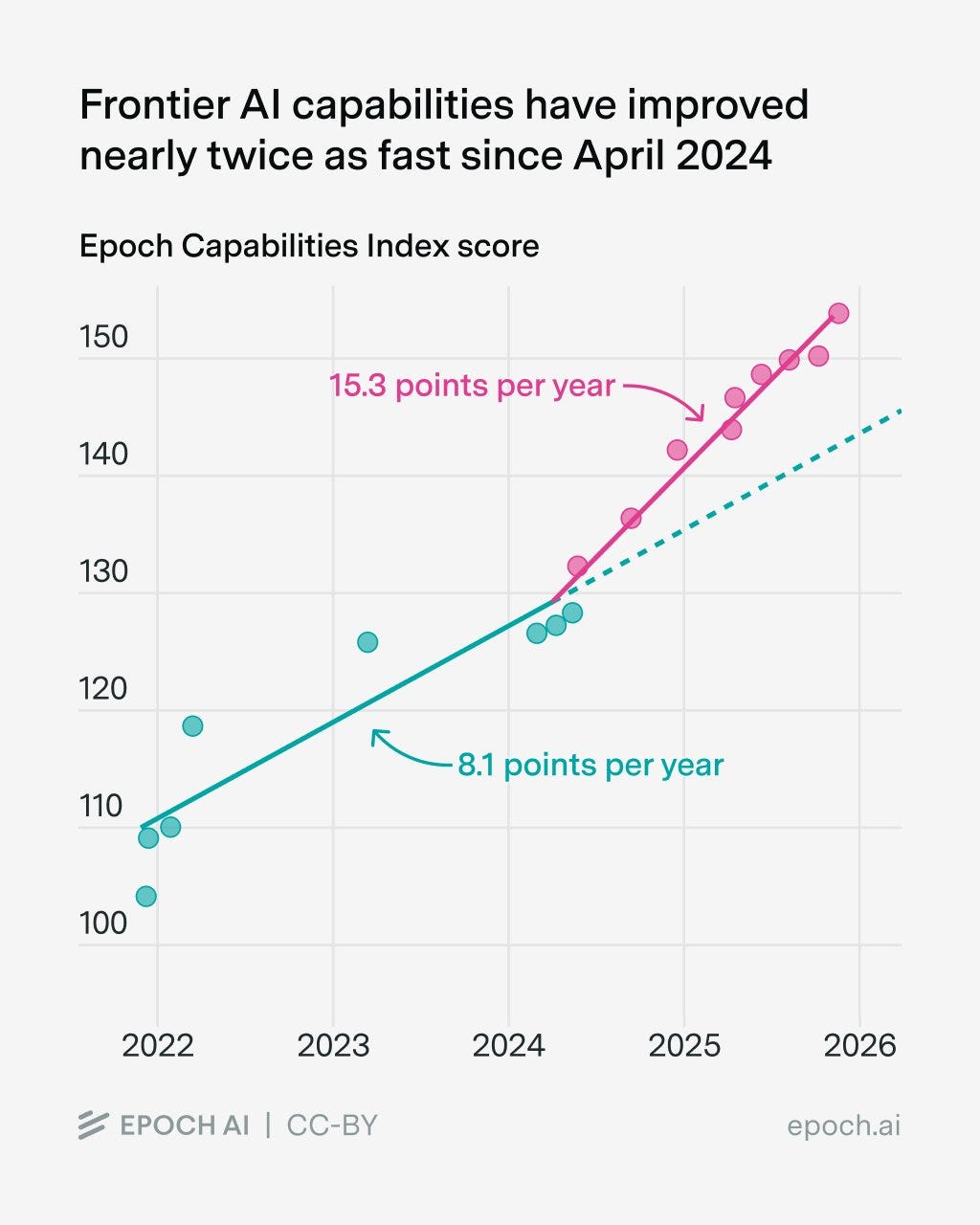

Frontier AI capabilities have not just continued progressing. Over the last 2 years, they’ve accelerated!

The applications are crazy. This elite astrophysicist observed that ChatGPT was able to match his level on an obscure, unpublished question.

AI now solves math problems that were previously unsolved.

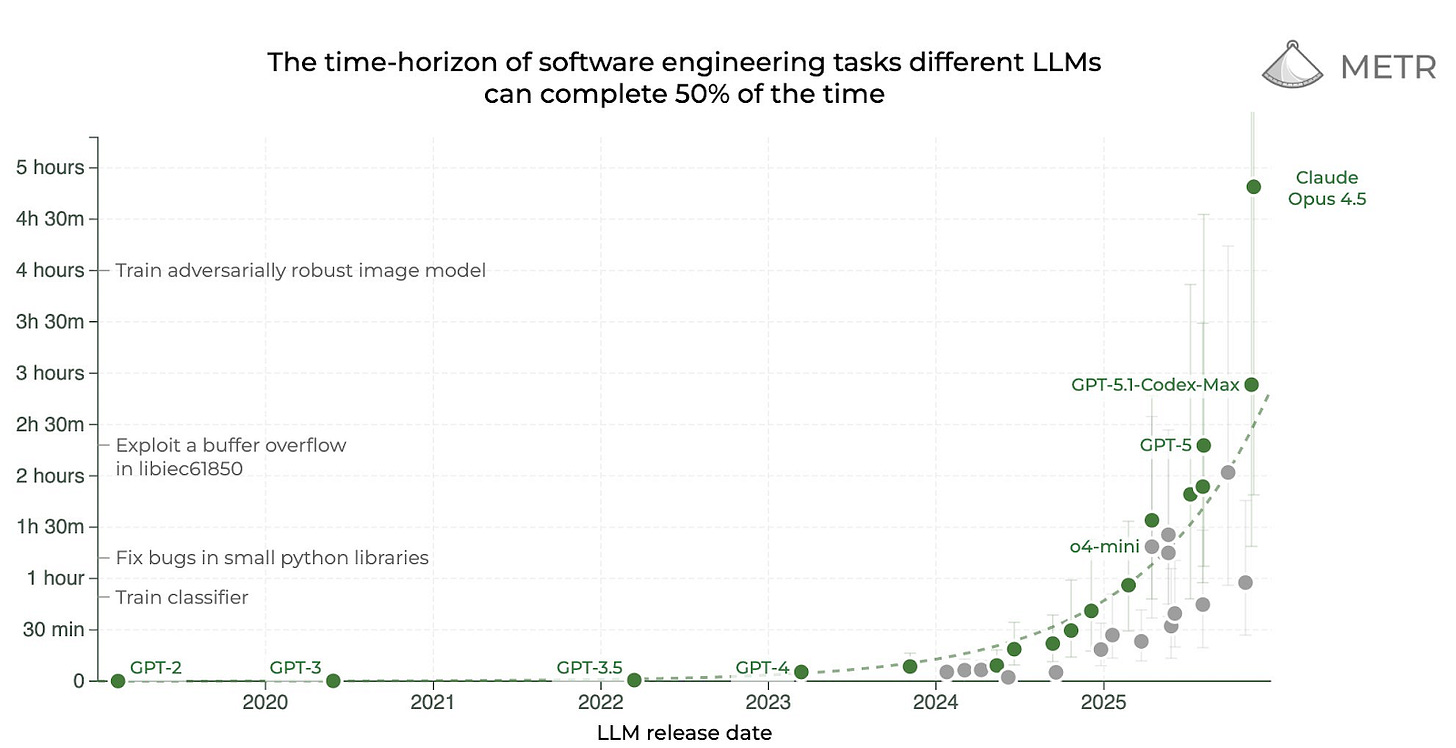

In Is There an AI Bubble?, I highlighted how important it is for error rates to decline to near zero to reach AGI, because then you can chain an arbitrarily long sequence of reasoning steps, effectively becoming a superintelligence. I also explained that a good benchmark for this is the length of the tasks that models complete with a 50% error rate. This is what they look like right now:

But can we do better than a 50% error rate on 5-hour tasks? This paper claims so. It explains how to solve a million-step task with zero errors. It achieves this by breaking the task down into very tiny ones and having a series of agents vote on the solutions to these microtasks to ensure quality. The reliability comes from the process, not the model, which was a cheaper, older version of ChatGPT.
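To see why chaining steps is so unforgiving, and why voting helps, here is a minimal Python sketch of the arithmetic. It rests on strong assumptions that are mine, not the paper's: every step fails independently with the same probability, each microtask has a right and a wrong answer, and the agents use a simple majority vote (the paper's actual voting scheme may differ). The function names, the 1% error rate, and the 11 voters are all hypothetical, chosen only for illustration.

```python
from math import comb


def chain_success(step_error: float, steps: int) -> float:
    """Probability that all `steps` succeed, assuming independent steps
    that each fail with probability `step_error`."""
    return (1.0 - step_error) ** steps


def majority_vote_error(step_error: float, voters: int) -> float:
    """Probability that a simple majority of `voters` independent agents
    gets one microtask wrong (assumption: binary right/wrong answers)."""
    if voters % 2 == 0:
        raise ValueError("use an odd number of voters to avoid ties")
    majority = voters // 2 + 1
    # Sum the binomial probabilities of `majority` or more agents being wrong.
    return sum(
        comb(voters, k) * step_error**k * (1.0 - step_error) ** (voters - k)
        for k in range(majority, voters + 1)
    )


if __name__ == "__main__":
    steps = 1_000_000
    single_agent_error = 0.01  # illustrative: one agent is wrong 1% of the time

    # One agent per step: errors compound across a million steps.
    print("no voting:", chain_success(single_agent_error, steps))

    # Eleven agents vote on every step: per-step error collapses first.
    voted_error = majority_vote_error(single_agent_error, voters=11)
    print("per-step error with voting:", voted_error)
    print("with voting:", chain_success(voted_error, steps))
```

Under these assumptions, a single agent that is right 99% of the time has essentially no chance of finishing a million-step chain cleanly, while the same agent run eleven times per step with a vote finishes almost every time. Real errors are often correlated between agents, so treat this as an upper bound on what voting can buy.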

But one AI stands out, and it might well be AGI.